Inferring representations of 3D scenes from 2D observations is a fundamental problem in computer graphics, computer vision, and artificial intelligence. Emerging 3D-structured neural scene representations are a promising approach to 3D scene understanding. In this work, we propose a novel neural scene representation, Light Field Networks or LFNs, which represent both geometry and appearance of the underlying 3D scene in a 360-degree, four-dimensional light field parameterized via a neural implicit representation. Rendering a ray from an LFN requires only a *single* network evaluation, as opposed to hundreds of evaluations per ray for the ray-marching or volumetric renderers used by 3D-structured neural scene representations. In the setting of simple scenes, we leverage meta-learning to learn a prior over LFNs that enables multi-view consistent light field reconstruction from as little as a single image observation. This results in dramatic reductions in time and memory complexity, and enables real-time rendering. The cost of storing a 360-degree light field via an LFN is two orders of magnitude lower than that of conventional methods such as the Lumigraph. Utilizing the analytical differentiability of neural implicit representations and a novel parameterization of light space, we further demonstrate the extraction of sparse depth maps from LFNs.

The trouble with SRNs, NeRF & Co.

3D-structured neural scene representations have recently enjoyed great success, and have enabled new applications in graphics, vision, and artificial intelligence. However, they are currently severely limited by the neural rendering algorithms they require for training and testing: both volumetric rendering as in NeRF and sphere-tracing as in Scene Representation Networks require hundreds of evaluations of the representation per ray, resulting in forward pass times on the order of tens of seconds for a single 256x256 image. This cost is incurred at both training and test time, and training additionally requires backpropagating through this extremely expensive rendering algorithm. This makes these neural scene representations borderline infeasible for anything but computer graphics tasks, and even there, additional tricks (such as caching and hybrid explicit-implicit representations) are required to achieve real-time framerates.
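To make the scale of this cost concrete, the following back-of-the-envelope sketch counts network evaluations for one 256x256 image. The value of 200 samples per ray is an illustrative assumption standing in for "hundreds"; it is not a number reported on this page.

```python
# Back-of-the-envelope count of network evaluations per rendered image.
# SAMPLES_PER_RAY is an illustrative assumption standing in for "hundreds".
HEIGHT, WIDTH = 256, 256
SAMPLES_PER_RAY = 200  # assumed, not a measured value

rays = HEIGHT * WIDTH                        # one ray per pixel
evals_ray_marching = rays * SAMPLES_PER_RAY  # one evaluation per sample along each ray
evals_single_query = rays                    # one evaluation per ray

print(f"rays per image:            {rays:,}")
print(f"evaluations, ray marching: {evals_ray_marching:,}")
print(f"evaluations, one per ray:  {evals_single_query:,}")
```

The evaluation count is only one factor in wall-clock time; the size of the network being evaluated matters as well, so this ratio should not be read as a speedup figure.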

Light Field Networks

We introduce Light Field Networks, or LFNs for short. Instead of mapping a 3D world coordinate to whatever is at that coordinate, LFNs directly map an oriented ray to whatever is observed by that ray. In this manner, LFNs parameterize the full 360-degree light field of the underlying 3D scene. As a result, LFNs require only a single evaluation of the neural implicit representation per ray. This unlocks rendering at more than 500 FPS with a minimal memory footprint. Below, we compare rendering an LFN to the recently proposed PixelNeRF: LFNs accelerate rendering by a factor of about 15,000.
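The "one query per ray" idea can be sketched in a few lines. The sketch below assumes the ray is encoded in 6D Plücker coordinates using the common convention (unit direction, origin × direction), and it uses a tiny, randomly initialized MLP as a hypothetical stand-in for a trained LFN. The names `plucker` and `make_toy_lfn` are illustrative and do not come from the authors' code.

```python
import math
import random

def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def plucker(origin, direction):
    """6D ray coordinates: unit direction d followed by the moment o x d."""
    d = normalize(direction)
    return d + cross(origin, d)

def make_toy_lfn(hidden=16, seed=0):
    """Hypothetical stand-in for a trained LFN: a random 6 -> hidden -> 3 MLP."""
    rng = random.Random(seed)
    w1 = [[rng.gauss(0, 0.5) for _ in range(6)] for _ in range(hidden)]
    w2 = [[rng.gauss(0, 0.5) for _ in range(hidden)] for _ in range(3)]

    def lfn(ray6):
        h = [max(0.0, sum(w * x for w, x in zip(row, ray6))) for row in w1]
        # Sigmoid keeps each color channel in [0, 1].
        return [1.0 / (1.0 + math.exp(-sum(w * x for w, x in zip(row, h))))
                for row in w2]

    return lfn

lfn = make_toy_lfn()
d = [0.0, 0.0, -1.0]
r_a = plucker([1.0, 2.0, 3.0], d)
r_b = plucker([1.0, 2.0, -5.0], d)  # a different point on the same line
color = lfn(r_a)                    # a single network call yields the ray's color
print(r_a, r_b, color)
```

Because the moment is unchanged when the origin slides along the ray, `r_a` and `r_b` coincide: the encoding depends on the ray itself, not on which point along it is chosen as the origin.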

The geometry of light fields

Counterintuitively, LFNs encode not only the appearance of the underlying 3D scene, but also its geometry. Our novel parameterization of light fields via the mathematically convenient Plücker coordinates, together with the unique properties of neural implicit representations, allows us to extract sparse depth maps of the underlying 3D scene in constant time, without ray-marching! We achieve this by deriving a relationship between an LFN's derivatives and the scene's geometry: at a high level, the geometry of the level sets of the 4D light field encodes the geometry of the underlying scene, and these level sets can be efficiently accessed via automatic differentiation. This is in contrast to 3D-structured representations, which require ray-marching to extract any representation of the scene's geometry. Below, we show sparse depth maps extracted from LFNs that were trained to represent simple room-scale environments.

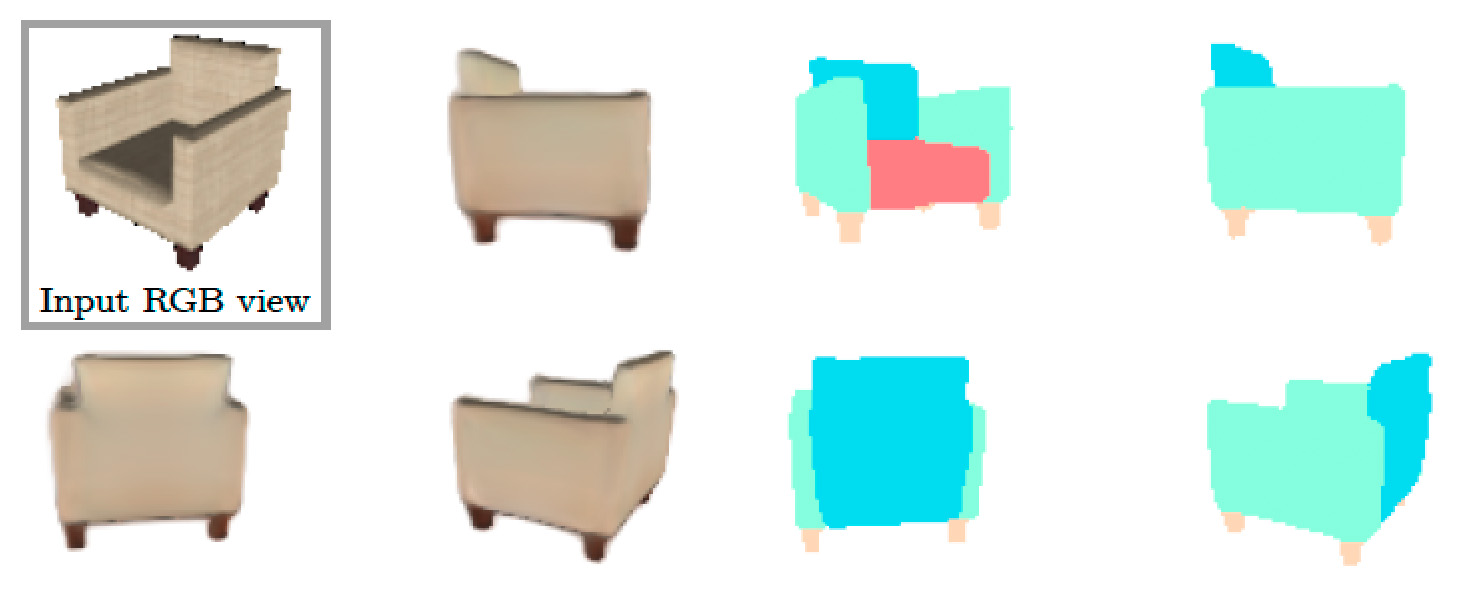
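The link between light field derivatives and geometry can be illustrated in a deliberately simplified setting. The sketch below is a 2D "flatland" toy that uses a two-line parameterization rather than Plücker coordinates: a ray passes through (s, 0) and (t, D), and a Lambertian surface point at depth y is seen by all rays with s(1 - y/D) + t(y/D) held constant. The light field is constant along that level set, so its gradient is proportional to (1 - y/D, y/D), which gives y = D · c_t / (c_s + c_t). The texture, the constant `D`, and the use of finite differences in place of automatic differentiation are all assumptions made for illustration.

```python
import math

D = 1.0  # assumed distance between the parameterization lines y = 0 and y = D

def texture(x):
    """Hypothetical Lambertian texture as a function of surface position x."""
    return math.sin(3.0 * x) + 0.5 * x

def light_field(s, t, depth):
    """Color seen by the ray through (s, 0) and (t, D) hitting a surface at y = depth."""
    x_hit = s + (t - s) * depth / D
    return texture(x_hit)

def depth_from_gradient(c, s, t, eps=1e-5):
    """Recover depth from the light field gradient at (s, t).

    Central finite differences stand in for automatic differentiation.
    The ratio is undefined where the color gradient vanishes.
    """
    dc_ds = (c(s + eps, t) - c(s - eps, t)) / (2 * eps)
    dc_dt = (c(s, t + eps) - c(s, t - eps)) / (2 * eps)
    return D * dc_dt / (dc_ds + dc_dt)

true_depth = 2.5
c = lambda s, t: light_field(s, t, true_depth)
estimate = depth_from_gradient(c, s=0.2, t=0.4)
print(f"true depth {true_depth}, recovered {estimate:.6f}")
```

No marching along the ray is involved: the estimate comes from two derivatives at a single ray. In this toy, the estimate breaks down wherever the texture is locally flat, which is one way to see why depth recovered this way would be sparse.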

Meta-learning LFNs

While 3D-structured neural representations ensure multi-view consistency via ray-marching, Light Field Networks are not naturally multi-view consistent. To overcome this challenge, we leverage meta-learning via hypernetworks to learn a space of multi-view consistent light fields. As a corollary, we can leverage this learned prior to reconstruct an LFN from only a single image observation! In this regime, LFNs outperform existing globally conditioned neural scene representations such as Scene Representation Networks or the Differentiable Volumetric Renderer, while rendering in real time and requiring orders of magnitude less memory.
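Structurally, a hypernetwork maps a per-scene latent code to the weights of the network that represents that scene. The sketch below shows only this structure, under several assumptions: the hypernetwork is a single random linear layer, the LFN is a tiny 6 → 8 → 3 MLP, and nothing is trained. All names and sizes are hypothetical, and how a latent code is inferred from an image is not shown.

```python
import math
import random

RAY_DIM, HIDDEN, RGB, LATENT = 6, 8, 3, 4      # illustrative sizes
N_WEIGHTS = HIDDEN * RAY_DIM + RGB * HIDDEN    # total weights of the toy LFN

def make_hypernetwork(seed=0):
    """Hypothetical hypernetwork: a linear map from latent code to LFN weights."""
    rng = random.Random(seed)
    proj = [[rng.gauss(0, 0.5) for _ in range(LATENT)] for _ in range(N_WEIGHTS)]

    def hypernetwork(z):
        return [sum(p * zi for p, zi in zip(row, z)) for row in proj]

    return hypernetwork

def lfn_from_weights(flat):
    """Unpack a flat weight vector into a 6 -> HIDDEN -> 3 MLP over ray coordinates."""
    split = HIDDEN * RAY_DIM
    w1 = [flat[i * RAY_DIM:(i + 1) * RAY_DIM] for i in range(HIDDEN)]
    w2 = [flat[split + i * HIDDEN:split + (i + 1) * HIDDEN] for i in range(RGB)]

    def lfn(ray6):
        h = [math.tanh(sum(w * x for w, x in zip(row, ray6))) for row in w1]
        return [math.tanh(sum(w * x for w, x in zip(row, h))) for row in w2]

    return lfn

hyper = make_hypernetwork()
ray = [0.0, 0.0, -1.0, -2.0, 1.0, 0.0]                   # a 6D ray coordinate
scene_a = lfn_from_weights(hyper([1.0, 0.0, 0.0, 0.0]))  # latent code for scene A
scene_b = lfn_from_weights(hyper([0.0, 1.0, 0.0, 0.0]))  # latent code for scene B
color_a, color_b = scene_a(ray), scene_b(ray)
print(color_a, color_b)
```

Each latent code yields a different light field for the same ray. In a learned version, training across many scenes would shape the hypernetwork so that the light fields it produces are multi-view consistent.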

Related Projects

Check out our related projects on neural scene representations!